Top Advantages and Disadvantages of Hadoop

Big Data has become essential as industries grow: the goal is to gather information and uncover the hidden facts behind the data. Data shows industries how they can improve their operations. Many industries now revolve around data, and large volumes of it are gathered and analyzed through various processes and tools. Hadoop is one of the tools for handling this volume of data, since it can extract information from very large datasets efficiently. Like any tool, Hadoop has both advantages and disadvantages when dealing with Big Data.

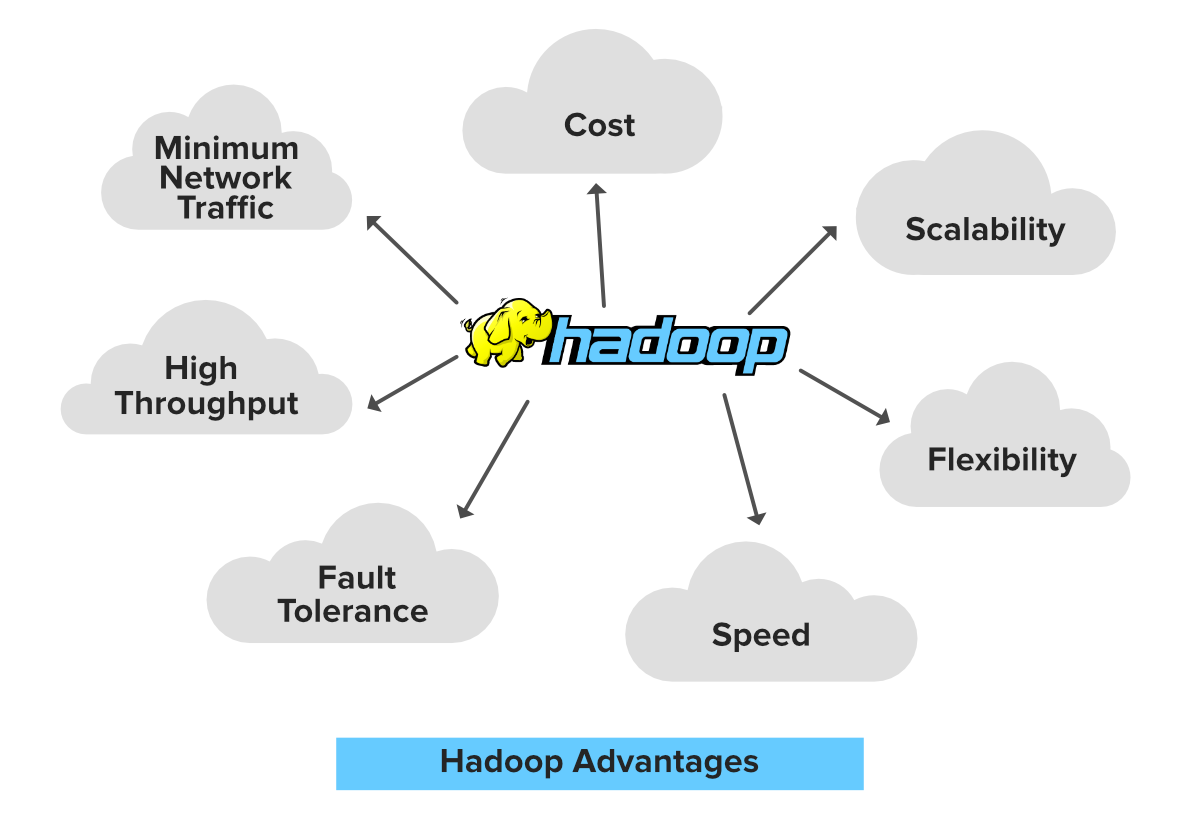

Advantages

1. Cost

Hadoop is open-source and runs on inexpensive commodity hardware, which makes it a cost-efficient model. Traditional relational databases, by contrast, require expensive hardware and high-end processors to handle Big Data. Because storing massive volumes of data in a traditional relational database is not cost-effective, companies started to discard raw data, which can leave them with an incomplete picture of their business. In short, Hadoop offers two cost benefits: it is open-source, so it is free to use, and it runs on commodity hardware, which is also inexpensive.

2. Scalability

Hadoop is a highly scalable model. A large amount of data is divided across multiple inexpensive machines in a cluster and processed in parallel. The number of these machines, or nodes, can be increased or decreased according to the enterprise's requirements. A traditional RDBMS (Relational Database Management System), in contrast, cannot easily be scaled out this way to handle such large amounts of data.
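
To make the idea concrete, here is a minimal Python sketch of how adding nodes shrinks each machine's share of the work. It assumes the data has already been split into equal-size blocks and spreads them round-robin; `distribute_blocks` is a hypothetical helper, and real HDFS placement is decided by the NameNode's block placement policy, not by plain round-robin.

```python
from collections import Counter


def distribute_blocks(num_blocks: int, num_nodes: int) -> Counter:
    """Assign blocks to nodes round-robin and count how many blocks each node holds.

    Simplified model only (hypothetical helper): real HDFS placement is
    decided by the NameNode's block placement policy.
    """
    if num_nodes <= 0:
        raise ValueError("the cluster needs at least one node")
    return Counter(block_id % num_nodes for block_id in range(num_blocks))


# Adding nodes shrinks the share of data each machine must store and process.
for nodes in (4, 8, 16):
    per_node = distribute_blocks(1000, nodes)
    print(f"{nodes} nodes -> at most {max(per_node.values())} blocks per node")
```

Doubling the cluster roughly halves the per-node load (250, then 125, then 63 blocks), which is the essence of scaling out.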

3. Flexibility

Hadoop is designed to handle any kind of dataset efficiently: structured (MySQL data), semi-structured (XML, JSON), and unstructured (images and videos). It can process data regardless of its structure, which makes it highly flexible. This is very useful for enterprises, because they can process large datasets easily and use Hadoop to extract valuable insights from sources such as social media and email. With this flexibility, Hadoop can be used for log processing, data warehousing, fraud detection, and more.

4. Speed

Hadoop manages its storage with a distributed file system, HDFS (Hadoop Distributed File System). In a distributed file system, a large file is broken into smaller blocks, which are then distributed among the nodes available in a Hadoop cluster. Because this large number of blocks is processed in parallel, Hadoop is fast and delivers higher performance than traditional database management systems. When you are dealing with large amounts of unstructured data, speed is an important factor; with a large enough cluster, Hadoop can process terabytes of data in just a few minutes.
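
The splitting step can be sketched in a few lines of Python, assuming the HDFS default block size of 128 MB. `split_into_blocks` is a hypothetical helper for illustration, not an HDFS API; it only computes block sizes and does not store anything.

```python
BLOCK_SIZE_MB = 128  # HDFS default block size; configurable per cluster or per file


def split_into_blocks(file_size_mb: int, block_size_mb: int = BLOCK_SIZE_MB) -> list[int]:
    """Return the sizes (in MB) of the blocks a file is broken into.

    Every block is full-sized except possibly the last one, which holds
    only the remainder of the file.
    """
    if file_size_mb < 0 or block_size_mb <= 0:
        raise ValueError("file size must be non-negative and block size positive")
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    sizes = [block_size_mb] * full_blocks
    if remainder:
        sizes.append(remainder)
    return sizes


blocks = split_into_blocks(1000)
print(len(blocks), blocks[-1])  # 8 104
```

A 1000 MB file becomes seven full 128 MB blocks plus one 104 MB block, and each of those eight blocks can be handed to a different node.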

5. Fault Tolerance

Hadoop runs on commodity hardware (inexpensive systems) that can crash at any moment. In Hadoop, data is replicated across multiple DataNodes in the cluster, which keeps the data available if any of your systems crashes. If the machine you are reading from runs into a technical issue, the data can still be read from other nodes in the cluster, because it is replicated by default. By default, Hadoop keeps 3 copies of each file block and stores them on different nodes.
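
The following Python sketch illustrates replication and failover under simplifying assumptions: each block's three replicas go on randomly chosen distinct nodes, and a reader simply tries the next replica when a node is down. `place_replicas` and `read_block` are hypothetical names; real HDFS placement is rack-aware, and reads are handled by the HDFS client.

```python
import random

REPLICATION_FACTOR = 3  # HDFS default, controlled by the dfs.replication property


def place_replicas(nodes, replication=REPLICATION_FACTOR, rng=None):
    """Choose `replication` distinct nodes to hold copies of one block.

    Simplification: nodes are picked at random; real HDFS also takes
    rack topology into account.
    """
    rng = rng or random.Random()
    if replication > len(nodes):
        raise ValueError("not enough nodes for the requested replication factor")
    return rng.sample(nodes, replication)


def read_block(block_id, replicas, failed_nodes):
    """Return the first live node holding the block, falling back to the
    next replica whenever a node has crashed."""
    for node in replicas:
        if node not in failed_nodes:
            return node
    raise OSError(f"all replicas of {block_id} are unavailable")


nodes = ["dn1", "dn2", "dn3", "dn4", "dn5"]
replicas = place_replicas(nodes, rng=random.Random(7))
crashed = {replicas[0]}  # simulate the first replica's node failing
print(replicas, "->", read_block("blk_1", replicas, crashed))
```

Losing one node (or even two) still leaves a readable copy; only when all three replica holders fail is the block unavailable.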

6. High Throughput

Hadoop works on a distributed file system in which jobs are split up and assigned to the various DataNodes in a cluster. The data is processed in parallel across the Hadoop cluster, which produces high throughput. Throughput is simply the amount of work (tasks or jobs) completed per unit of time.
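
Throughput can be illustrated with a simple model, assuming every task takes the same time, each node runs one task at a time, and scheduling overhead is ignored. `job_time` and `throughput` are illustrative helpers, not Hadoop APIs.

```python
import math


def job_time(num_tasks: int, num_nodes: int, seconds_per_task: float) -> float:
    """Elapsed time when tasks run in parallel 'waves', one task per node at a time.

    Assumes identical task durations and no scheduling overhead.
    """
    waves = math.ceil(num_tasks / num_nodes)
    return waves * seconds_per_task


def throughput(num_tasks: int, num_nodes: int, seconds_per_task: float) -> float:
    """Throughput = tasks completed per unit of time (tasks per second here)."""
    return num_tasks / job_time(num_tasks, num_nodes, seconds_per_task)


for nodes in (1, 10):
    print(nodes, "node(s):", throughput(100, nodes, 2.0), "tasks/s")
```

In this model, spreading the same 100 tasks over 10 nodes cuts the job from 200 seconds to 20 seconds, so throughput rises from 0.5 to 5 tasks per second. Real clusters fall short of this ideal because of scheduling, shuffle, and I/O costs.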

7. Minimum Network Traffic

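In Hadoop, each task is divided into many small sub-tasks, which are then assigned to the DataNodes available in the Hadoop cluster. Each DataNode processes only a small amount of data. Because each sub-task is scheduled, whenever possible, on the node that already stores its data, the computation moves to the data instead of the data moving across the network, which keeps traffic in the Hadoop cluster low.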
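
This idea, usually called data locality, can be sketched as a toy scheduler that prefers to run each task on a free node that already stores the block, so only tasks without such a node have to pull data over the network. `assign_tasks` is a hypothetical and greatly simplified stand-in for Hadoop's real scheduler, which also considers rack locality and node capacity.

```python
from itertools import cycle


def assign_tasks(block_locations: dict[str, list[str]],
                 free_nodes: list[str]) -> dict[str, tuple[str, bool]]:
    """Map each block to (node, is_local).

    A block is 'local' when one of the nodes already holding it is free;
    otherwise the task is sent to the next free node in turn and must
    read the block over the network.
    """
    free = set(free_nodes)
    fallback = cycle(free_nodes)
    plan = {}
    for block, holders in block_locations.items():
        local = [node for node in holders if node in free]
        if local:
            plan[block] = (local[0], True)
        else:
            plan[block] = (next(fallback), False)
    return plan


locations = {"blk_1": ["dn1", "dn2"], "blk_2": ["dn3"], "blk_3": ["dn4"]}
plan = assign_tasks(locations, ["dn1", "dn3"])
remote = sum(1 for _, is_local in plan.values() if not is_local)
print(plan, "remote reads:", remote)
```

Here two of the three tasks run where their data already lives, so only one block has to cross the network.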

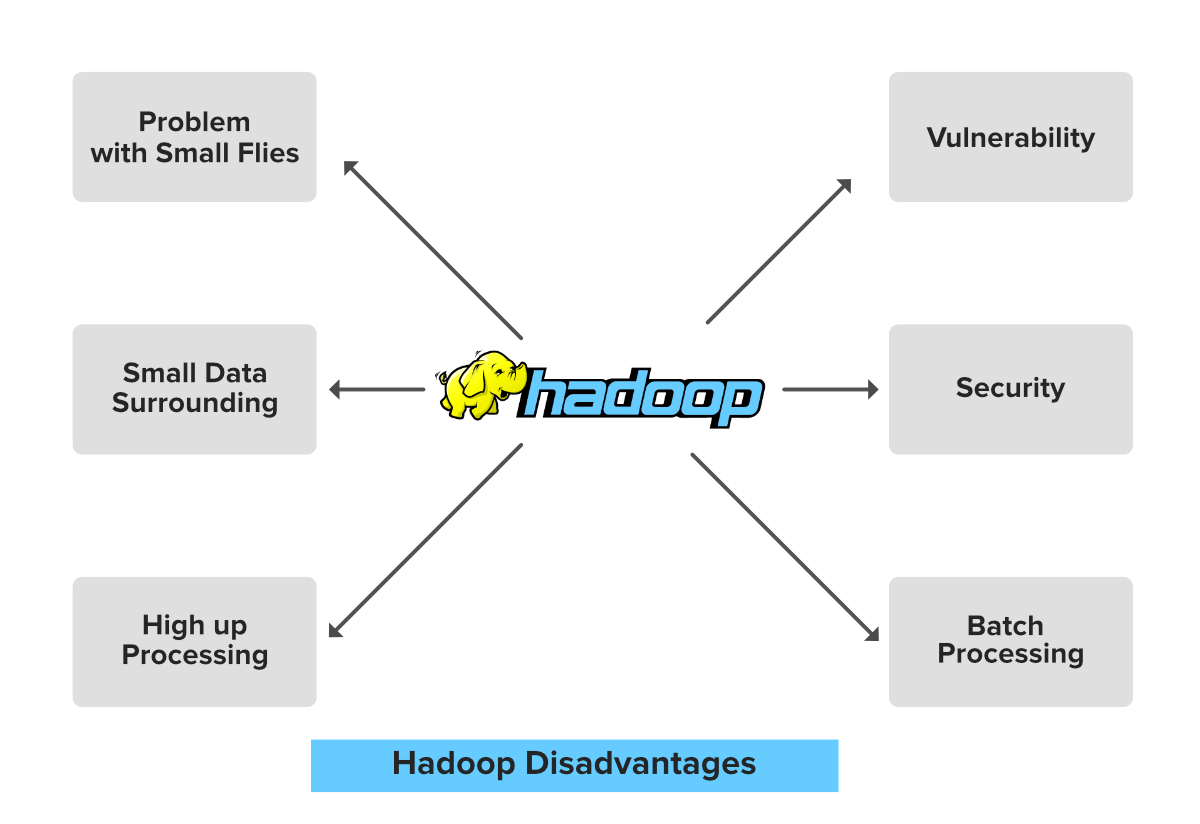

Disadvantages

1. Problem with Small Files

Hadoop performs efficiently on a small number of large files. It stores each file as a series of blocks, which are 128 MB in size by default and are often configured larger, for example 256 MB. Hadoop struggles when it has to access a large number of small files: the NameNode keeps track of every file and block in its memory, so a huge number of small files overloads the NameNode and makes it difficult to work with.
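
A rough estimate shows why small files hurt. The sketch below assumes the often-quoted rule of thumb that each file and each block costs the NameNode about 150 bytes of memory (an approximation, not an exact figure), ignores directories, and assumes non-empty files; the function names are hypothetical.

```python
import math

BLOCK_SIZE_MB = 128     # HDFS default block size
BYTES_PER_OBJECT = 150  # rule-of-thumb NameNode memory per file or block object (assumption)


def namenode_objects(num_files: int, file_size_mb: float,
                     block_size_mb: int = BLOCK_SIZE_MB) -> int:
    """Each (non-empty) file is one object, plus one object per block it occupies."""
    blocks_per_file = max(1, math.ceil(file_size_mb / block_size_mb))
    return num_files * (1 + blocks_per_file)


def namenode_memory_bytes(num_files: int, file_size_mb: float) -> int:
    """Approximate NameNode memory needed to track the files and their blocks."""
    return namenode_objects(num_files, file_size_mb) * BYTES_PER_OBJECT


# Roughly the same 10,000 MB of data, stored two different ways:
print(namenode_memory_bytes(10_000, 1))  # 10,000 files of 1 MB each
print(namenode_memory_bytes(1, 10_000))  # one 10,000 MB file
```

Under these assumptions, the small-file layout needs about 3,000,000 bytes of NameNode memory, while the single large file needs about 12,000 bytes (1 file plus 79 blocks), a 250-fold difference for the same amount of data.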

2. Vulnerability

Hadoop is a framework written in Java, and Java is one of the most commonly used programming languages. Because Java is so widespread, its weaknesses are well known and heavily targeted, which makes Hadoop easier for cyber-criminals to exploit.

3. Low Performance in Small Data Environments

Hadoop is mainly designed for dealing with large datasets, so it is used most efficiently by organizations that generate massive volumes of data. Its efficiency decreases when it works in small-data environments.

4. Lack of Security

Data is everything for an organization, yet Hadoop's security features are disabled by default. Whoever manages the data therefore needs to pay close attention to security and take appropriate action. Hadoop relies on Kerberos for authentication, which is not easy to manage. On top of that, encryption of stored data and of network traffic is not enabled by default, which is a further cause for concern.

5. High Processing Overhead

Read/write operations in Hadoop are expensive because we are dealing with very large data, in the range of terabytes or petabytes. In Hadoop, data is read from and written to disk, which makes in-memory computation difficult and leads to high processing overhead.

6. Supports Only Batch Processing

Batch processes are processes that run in the background without any interaction with the user. The engines used for these processes inside the Hadoop core are not very efficient, and they cannot produce output with low latency.
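
The classic batch job is a MapReduce word count. The pure-Python sketch below imitates the map, shuffle, and reduce phases on a single machine; it uses no Hadoop APIs, and the function names are illustrative. Notice that the reduce step cannot start until every record has been mapped and shuffled, which is why a batch job only delivers its output at the very end.

```python
from collections import defaultdict


def map_phase(lines):
    """Emit (word, 1) for every word, like a MapReduce mapper."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs):
    """Group values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Sum the counts for each word, like a MapReduce reducer."""
    return {word: sum(counts) for word, counts in groups.items()}


def run_batch_job(lines):
    # The shuffle consumes the entire map output before reduce begins,
    # so no result is available until the whole input has been processed.
    return reduce_phase(shuffle_phase(map_phase(lines)))


print(run_batch_job(["Hadoop stores data", "Hadoop processes data"]))
```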